NoW Challenge

Evaluation metric

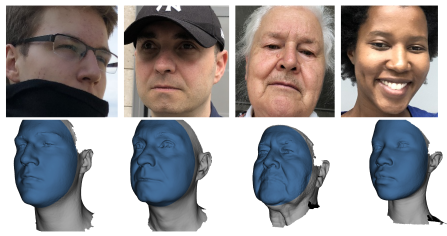

Given a single monocular image, the challenge is to reconstruct a 3D face. Since the predicted meshes are expressed in different local coordinate systems, each reconstructed 3D mesh is first rigidly aligned (i.e., rotation, translation, and optional scaling) to the scan using a set of corresponding landmarks between the prediction and the scan. We then refine this with a rigid alignment based on the scan-to-mesh distance between the ground truth scan and the reconstructed mesh, using the landmark-based alignment as initialization. The scan-to-mesh distance is the absolute distance between each scan vertex and the closest point on the mesh surface. See the NoW evaluation repository for implementation details. The scan-to-mesh distance after this rigid alignment is the reported evaluation metric.
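The two-stage alignment described above can be sketched in Python with NumPy. This is a minimal illustration under stated assumptions, not the code of the NoW evaluation repository: the landmark stage uses the Umeyama least-squares similarity fit, the refinement is a plain ICP loop without scaling, and, for brevity, the closest point on the mesh surface is approximated by the nearest mesh vertex (an upper bound on the true point-to-surface distance). The function names `similarity_transform`, `nearest_vertex_distances`, and `align_and_evaluate` are hypothetical.

```python
import numpy as np


def similarity_transform(src, dst, with_scale=True):
    """Umeyama least-squares fit of (s, R, t) such that s * R @ src_i + t ~ dst_i.

    src, dst: (N, 3) arrays of corresponding points (e.g. landmarks).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_src, mu_dst = src.mean(axis=0), dst.mean(axis=0)
    src_c, dst_c = src - mu_src, dst - mu_dst
    # Cross-covariance between the centred point sets.
    cov = dst_c.T @ src_c / len(src)
    U, S, Vt = np.linalg.svd(cov)
    # Flip the last axis if needed so that R is a proper rotation (det = +1).
    D = np.eye(3)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        D[2, 2] = -1.0
    R = U @ D @ Vt
    if with_scale:
        var_src = (src_c ** 2).sum() / len(src)
        s = np.trace(np.diag(S) @ D) / var_src
    else:
        s = 1.0
    t = mu_dst - s * R @ mu_src
    return s, R, t


def nearest_vertex_distances(scan, verts):
    """Distance from each scan point to its nearest mesh vertex, plus that vertex's index.

    Approximation (assumption of this sketch): the metric uses the closest point
    on the mesh *surface*; the nearest-vertex distance is an upper bound on it.
    Brute force, O(len(scan) * len(verts)) memory.
    """
    d2 = ((scan[:, None, :] - verts[None, :, :]) ** 2).sum(axis=-1)
    idx = d2.argmin(axis=1)
    return np.sqrt(d2[np.arange(len(scan)), idx]), idx


def align_and_evaluate(mesh_verts, mesh_lmks, scan, scan_lmks, iters=20):
    """Align a predicted mesh to a scan and return per-scan-vertex distances."""
    # 1) Landmark-based initialization: rotation, translation, and scale.
    s, R, t = similarity_transform(mesh_lmks, scan_lmks, with_scale=True)
    verts = s * mesh_verts @ R.T + t
    # 2) Rigid ICP refinement: pair each scan point with its closest mesh vertex,
    #    then re-fit rotation and translation only (no scaling).
    for _ in range(iters):
        _, idx = nearest_vertex_distances(scan, verts)
        _, R_i, t_i = similarity_transform(verts[idx], scan, with_scale=False)
        verts = verts @ R_i.T + t_i
    dist, _ = nearest_vertex_distances(scan, verts)
    return dist
```

For real scans with many thousands of vertices, the brute-force distance matrix would be replaced by a spatial index such as a KD-tree together with an exact point-to-triangle query.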

Participation

To participate in the challenge, register on the website and download the test images from the Downloads page. Upload the reconstruction results and the selected landmarks as instructed on the Downloads page. The error metrics are then computed and returned to you. Note that we do not provide the ground truth scans for the test data.

For the time being, participants can send their results (a Dropbox link to a zip file) to ringnet@tue.mpg.de. We will compute the error metric and send the scan-to-mesh distances computed for each scan vertex (see the paper for details), together with the summary statistics, to the participant's registered email address. Results submitted to the NoW challenge are kept confidential and are not shared with others unless the participants request it. To avoid overfitting to the test set, we allow at most 5 submissions per method.
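As an illustration of how the returned per-vertex distances can be condensed into summary statistics, here is a minimal NumPy sketch. It assumes the statistics are the median, mean, and standard deviation of the distances pooled over all test images, which is how NoW results are commonly reported; the exact statistics and file format returned by the organizers are not specified here, and `summarize_distances` is a hypothetical helper.

```python
import numpy as np


def summarize_distances(per_image_distances):
    """Pool per-vertex scan-to-mesh distances over all test images and summarize.

    per_image_distances: list of 1-D arrays, one per reconstructed image
    (hypothetical layout; the official format may differ).
    """
    all_d = np.concatenate(
        [np.asarray(d, dtype=float).ravel() for d in per_image_distances]
    )
    return {
        "median": float(np.median(all_d)),
        "mean": float(np.mean(all_d)),
        "std": float(np.std(all_d)),  # population standard deviation (ddof=0)
    }
```

Pooling all vertices before summarizing weights each image by its number of scan vertices; averaging per-image statistics instead would weight every image equally, so the two choices can give different numbers.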

To have a method ranked on the NoW website, specify "public submission" in the email subject. In this case, also provide an acronym or method name and the BibTeX entry for the method, which will be used in the leaderboard table.

Referencing the NoW challenge

@inproceedings{RingNet:CVPR:2019,
  title = {Learning to Regress {3D} Face Shape and Expression from an Image without {3D} Supervision},
  author = {Sanyal, Soubhik and Bolkart, Timo and Feng, Haiwen and Black, Michael},
  booktitle = {Proceedings IEEE Conf. on Computer Vision and Pattern Recognition (CVPR)},
  month = jun,
  pages = {7763--7772},
  year = {2019},
  month_numeric = {6}
}